Going to the doctor’s or the school nurse to have a needle stuck in your arm is a painful childhood memory that most people share. For those who travel or need regular flu jabs, it may even be a recurring one. But how much thought goes into the science behind those sharp needles? Vaccinations are available on the NHS and can protect an individual against a multitude of diseases, including diphtheria, tetanus, whooping cough, polio, Hib, hepatitis B, pneumococcal disease, rotavirus, meningococcal disease, measles, mumps, rubella and HPV. Protecting against these diseases not only relieves strain on the NHS but also saves lives. So why do some people choose not to vaccinate?

In 1998, The Lancet published research led by Dr Andrew Wakefield that proposed a link between the MMR vaccine (which protects against measles, mumps and rubella) and autism. This sparked global panic and a dramatic drop in MMR vaccination rates, which inevitably caused a rise in measles cases. Not only did this “doctor” carry out unnecessary, invasive tests on children without ethical approval or appropriate qualifications, but the General Medical Council also discredited the entire study for its lack of medical basis, and The Lancet retracted the paper in 2010.

Despite repeated research showing no evidence of a link between autism and the MMR vaccine, including an extensive review by the World Health Organisation, the anti-vax movement remains strong. Internet propaganda spouts arguments against vaccination, yet these arguments are built mainly on myths: that vaccines are made from aborted fetal tissue, that they contain harmful mercury, and that vaccination itself is dangerous. Numerous extensive academic studies and medical research have disproven these myths. They have also shown that any side effects from vaccination are usually mild and short-lived. More severe adverse reactions are extremely rare, and doctors and nurses are trained to treat them.

It could be argued that the choice to vaccinate is a personal one. This argument is flawed in several ways. The first is that a young child’s fate isn’t their own choice; it comes down to what their parents believe is best. It could be argued that the child’s right to safety and good health is being stripped from them before they are old enough and informed enough to make their own choices. By the time the child is ready to decide for themselves, it could be too late. Does this mean that parents have a moral obligation to vaccinate their children?

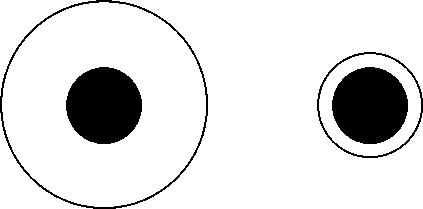

There is also an argument that the choice to vaccinate isn’t a personal one, because of the danger unvaccinated individuals can pose to others. A common belief is that if vaccinations are so effective, nobody else is at risk when someone chooses not to vaccinate their kids. On the contrary, many people would be at risk. These include children who are currently too young to be vaccinated and people of all ages who cannot be vaccinated because of immune system problems, such as cancer patients. Some parents have also claimed that if their unvaccinated child became ill, they could keep them home from nursery or school to avoid infecting others. This option is also unsuitable, because infected people are often contagious before symptoms properly begin.

It can be easy to underestimate the importance of vaccinations, and in turn the dangers of the anti-vax movement. Vaccination remains one of the easiest ways to stay healthy, protecting against serious illnesses that still pose a very real threat. Vaccine-preventable diseases, such as measles, mumps and whooping cough, still result in hospitalisations and deaths every year. This is largely because these diseases are more common in other countries, meaning children can be infected by travellers or while travelling themselves. Ultimately, a drop in vaccination rates could lead to an epidemic, in which diseases that have been virtually eliminated, such as meningitis C, return with a vengeance.

Yes, many may have their fears about the dangers of vaccinations. Yes, many may believe they are unnecessary and invasive. Yes, many may believe it’s safer not to vaccinate. Yet by choosing not to vaccinate, you put yourself at far, far greater risk. Not only are you at greater risk of severe infections, which could result in death, but you could also be neglecting your moral duties. As individuals, we all have a public health commitment to protect our families, friends and communities, and the only way to do this sufficiently is to vaccinate.
