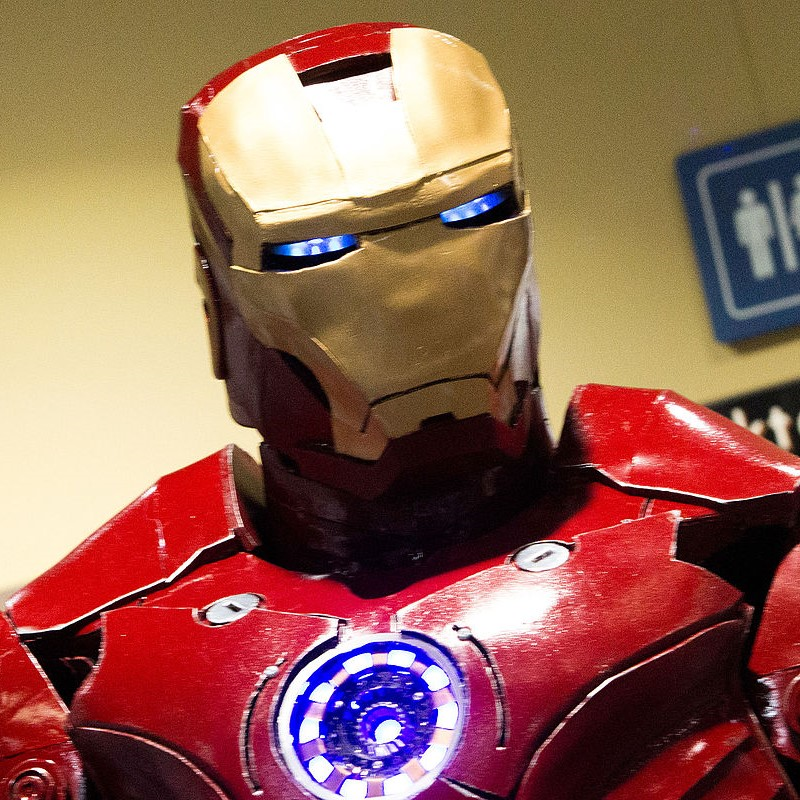

With the shadow of Infinity War looming over our heads, and given the role model status I currently place in Iron Man’s hands, I wanted to find out whether our (my) favourite genius billionaire playboy philanthropist could actually exist. The issues considered below come mostly from the films, with a few references to the comics.

Arc reactor-

From the comics and films, we know the arc reactor in Tony’s chest is a miniature fusion reactor built into his body that runs on a palladium core, and the particular palladium isotope apparently matters for its fusion properties. Setting aside the fact that the continuous collision of particles, and the beta and gamma radiation this would emit, would seriously damage Tony’s health, a power source that could produce as much energy as a full-sized reactor could plausibly power the suit. However, building a small-scale nuclear reactor, complete with a ring of electromagnets, heat containment and electron recovery, into someone’s body is still a long way off. The arc reactor would also need to store energy, because the power demand varies greatly depending on whether Tony is wearing the suit. In Iron Man 2, Tony quickly burns through the palladium cores of his arc reactor, which suggests that its output can be controlled depending on whether he’s fighting or just going about daily life (although it’s usually the former).
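To make the storage point concrete, here is a toy energy budget in Python. It is only a sketch: the reactor output, demand profile and buffer capacity are made-up placeholder numbers, not figures from the films, and the reactor output is held constant to isolate the storage side (Iron Man 2 suggests the real thing may also be throttled).

```python
def simulate_buffer(reactor_kw, demand_kw, capacity_kj, start_kj=0.0):
    """Track stored energy second by second for a constant-output reactor.

    reactor_kw: constant reactor output (hypothetical).
    demand_kw: power demand for each one-second step (hypothetical).
    capacity_kj: maximum energy the buffer can hold (hypothetical).

    Returns (stored energy after each step, total unmet demand), both in kJ.
    """
    stored = start_kj
    history = []
    unmet = 0.0
    for demand in demand_kw:
        # 1 kW sustained for 1 s is 1 kJ, so the net change is output minus demand.
        stored += reactor_kw - demand
        if stored > capacity_kj:
            stored = capacity_kj  # buffer full: the surplus is wasted
        elif stored < 0:
            unmet += -stored      # buffer empty: this shortfall goes unmet
            stored = 0.0
        history.append(stored)
    return history, unmet


# Placeholder profile: 5 s of everyday life at 1 kW, then 5 s of combat at 50 kW.
demand = [1.0] * 5 + [50.0] * 5
history, unmet = simulate_buffer(reactor_kw=20.0, demand_kw=demand, capacity_kj=100.0)
print(history)  # [19.0, 38.0, 57.0, 76.0, 95.0, 65.0, 35.0, 5.0, 0.0, 0.0]
print(unmet)    # 55.0
```

With these hypothetical numbers the buffer fills during everyday use, then runs dry partway through the fight, leaving 55 kJ of demand unmet; a bigger buffer or a throttleable reactor would close that gap.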

Flying suit-

Iron Man has four visible thrusters, one on each hand and foot. However, when he flies horizontally in the films, with his thrust pointing backwards, nothing opposes the downward pull of gravity, so he should keep moving forward while steadily losing altitude. He usually counters this by flying a parabolic or slightly ascending path, or by holding his arms outstretched like a plane’s wings, so that the pressure difference above and below his arms generates lift. Next, the four thrusters would need to generate enough thrust not only for Tony’s weight but also for heavy objects like cars, or even an aircraft carrier, which he has been known to help keep airborne (see: The Avengers (2012)). Based on the Nimitz class, one of the largest aircraft carriers in the world, this means the thrusters must be able to support up to 100,000 tonnes (100 million kg), which is not impossible given that we’re assuming the arc reactor can power the suit.
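Both claims above can be checked with basic mechanics. This Python sketch assumes level flight with no vertical thrust or lift and no air resistance (so the vertical motion is free fall from rest), and assumes the carrier’s weight is shared equally by four thrusters pointing straight down. The function names are illustrative only.

```python
G = 9.81  # gravitational acceleration, m/s^2 (rounded)


def altitude_lost(seconds):
    """Metres of altitude lost in level flight with no vertical thrust or lift.

    Vertically this is free fall from rest: h = 1/2 * g * t^2.
    Air resistance is ignored.
    """
    return 0.5 * G * seconds ** 2


def thrust_per_thruster(load_kg, thrusters=4):
    """Newtons each thruster must produce to hover while holding load_kg.

    Assumes the weight is shared equally and every thruster points straight down.
    """
    return load_kg * G / thrusters


carrier_kg = 100_000 * 1_000  # 100,000 tonnes, as in the text
print(altitude_lost(3))                 # roughly 44 m after 3 s
print(thrust_per_thruster(carrier_kg))  # roughly 2.45e8 N per thruster
```

Under these assumptions, three seconds of level flight would drop him about 44 m, and hovering with a 100,000-tonne carrier needs roughly 981 MN in total, or about 245 MN per thruster. For comparison, a Saturn V produced roughly 35 MN at liftoff, so each thruster would need about seven times that.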

Jarvis (or Friday, his badass Irish counterpart)-

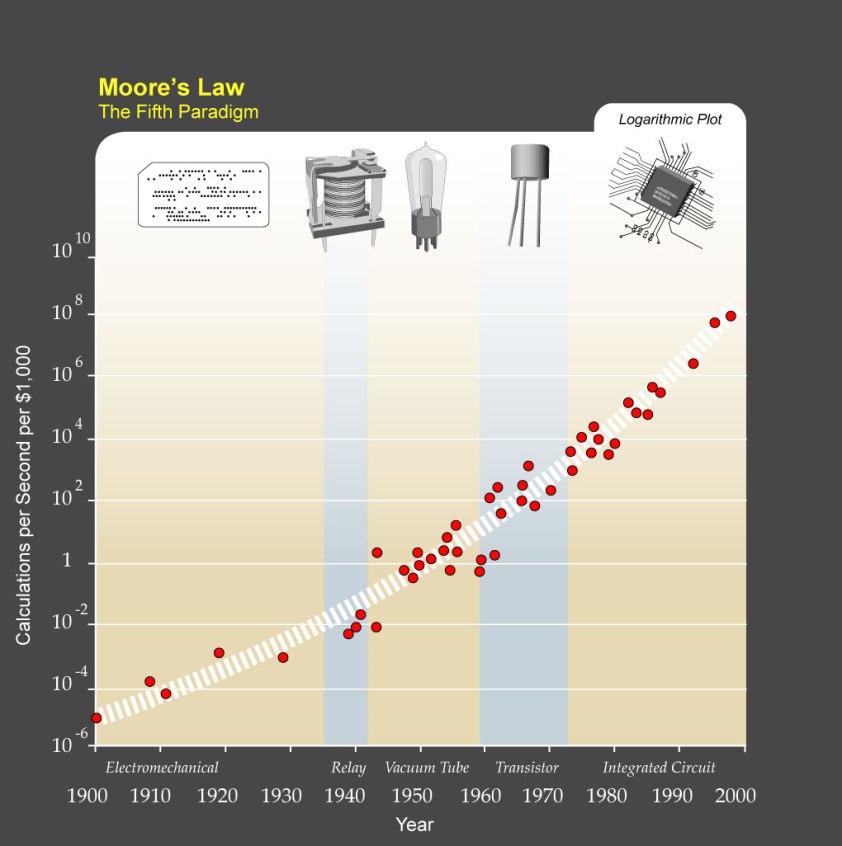

Considering Jarvis is basically a better version of Alexa, this is completely within reach. He can do facial recognition, use the Power of the Internet, listen to all your problems and do all the heavy brainpower lifting, like calculating the outcomes of different scenarios. He is peak AI technology, which he proves when he hides from Ultron by uploading himself to the internet. Again, this is possible, quite likely in the near future.

Ammo firing-

The chest beam the suit can fire is made up of photons from the reactor, so it is also possible. The arc reactor could produce photons during the particle collision process, and, as mentioned, the only issue is storing the energy until it is needed. He also has guns built into his arms and shoulders, much like a fighter plane. One issue is recoil: by conservation of momentum, every round he fires pushes him in the opposite direction, so firing forwards while flying would slow him down or even push him backwards. However, the guns themselves are reasonably realistic to build into a suit.
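The recoil problem follows from conservation of momentum. The sketch below uses placeholder values (a hypothetical 0.1 kg round at 1,000 m/s and a 200 kg combined suit-and-Tony mass, none of which come from the films) and ignores the momentum of the propellant gases. It also computes the reaction force of the chest beam, since a light beam of power P carries momentum away at the rate P/c.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s (exact by definition)


def recoil_speed(round_mass_kg, muzzle_speed_ms, wearer_mass_kg, rounds=1):
    """Backward change in speed (m/s) from firing, via conservation of momentum.

    Momentum given to the rounds forwards equals momentum given to the wearer
    backwards. Propellant gas and any thruster correction are ignored.
    """
    return rounds * round_mass_kg * muzzle_speed_ms / wearer_mass_kg


def beam_recoil_force(beam_power_w):
    """Reaction force (N) of a light beam: photons carry momentum at rate P / c."""
    return beam_power_w / SPEED_OF_LIGHT


# Placeholder values, not taken from the films.
print(recoil_speed(0.1, 1_000.0, 200.0))             # one round
print(recoil_speed(0.1, 1_000.0, 200.0, rounds=10))  # a ten-round burst
print(beam_recoil_force(1e9))                        # a 1 GW chest beam
```

With these numbers each shot would knock him back by 0.5 m/s (5 m/s for a ten-round burst), while even a hypothetical 1 GW chest beam would push back with only about 3.3 N, so the guns, not the beam, are where recoil becomes a real problem.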

Suit features: calling from afar, self-fitting, freeze prevention-

In Iron Man 3, Tony upgrades the suit so that he can summon it from miles away; it flies to him and then fits itself onto his body. Breaking this down, the first problem is summoning the suit, which isn’t possible over Bluetooth, and even a short-range radio link would struggle at those distances. Since the film doesn’t explain how he does it, a reasonable solution could be satellite signalling, similar to a satellite phone call. The freezing problem seen in the first Iron Man film, where the suit ices up at high altitude, had already been solved by using a “gold-titanium alloy from an earlier satellite design”, as mentioned in a comic. The self-fitting suit is entirely possible with a sequencing algorithm, since the suit is tailored to his shape. Put simply, the suit knows to secure the feet first, then clasp the legs, and so on.
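The “feet first, then the legs” idea is a dependency-ordering problem, which a topological sort solves. This Python sketch uses the standard library’s graphlib module (Python 3.9+); the piece names and dependencies are hypothetical, not the suit’s actual assembly sequence.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical suit pieces, each mapped to the pieces that must be secured first.
DEPENDS_ON = {
    "boots": set(),
    "shin plates": {"boots"},
    "thigh plates": {"shin plates"},
    "pelvis": {"thigh plates"},
    "torso": {"pelvis"},
    "gauntlets": set(),
    "arm plates": {"torso", "gauntlets"},
    "helmet": {"torso"},
}


def fitting_order(depends_on):
    """Return one valid order in which to attach the pieces.

    Every piece appears after all the pieces it depends on.
    Raises graphlib.CycleError if the dependencies contain a loop.
    """
    return list(TopologicalSorter(depends_on).static_order())


print(fitting_order(DEPENDS_ON))
```

static_order() returns one valid order rather than a unique one, and a dependency loop (an impossible fitting sequence) is reported as a CycleError instead of silently producing a bad order.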

Depending on how you rank these issues, this shows that a suit like Iron Man’s isn’t completely out of reach, and that the parts of the suit that don’t defy the laws of physics would be amazing feats of technology, even if they are just special effects for now.

Further Reading:

https://www.wired.com/2008/04/iron-mans-suit-defies-physics-mostly/

http://marvelcinematicuniverse.wikia.com/wiki/Iron_Man_Armor:_Mark_III

Shannon Greaves
